(Technically, it wasn't hacked; it was 'tricked' into changing lanes)

Researchers have devised a simple attack that might cause a Tesla to automatically steer into oncoming traffic under certain conditions. The proof-of-concept exploit does not hack into the car's onboard computing system. Instead, it uses small, inconspicuous stickers that trick the Enhanced Autopilot of a Model S 75 into detecting, and then following, a change in the current lane.

Tesla's Enhanced Autopilot supports a variety of capabilities, including lane-centering, self-parking, and the ability to automatically change lanes with the driver's confirmation. After Tesla reshuffled its Autopilot pricing, the feature is now mostly called simply "Autopilot." It relies primarily on cameras, ultrasonic sensors, and radar to gather information about its surroundings, including nearby obstacles, terrain, and lane changes. It then feeds that data into onboard computers that use machine learning to decide in real time how best to respond.

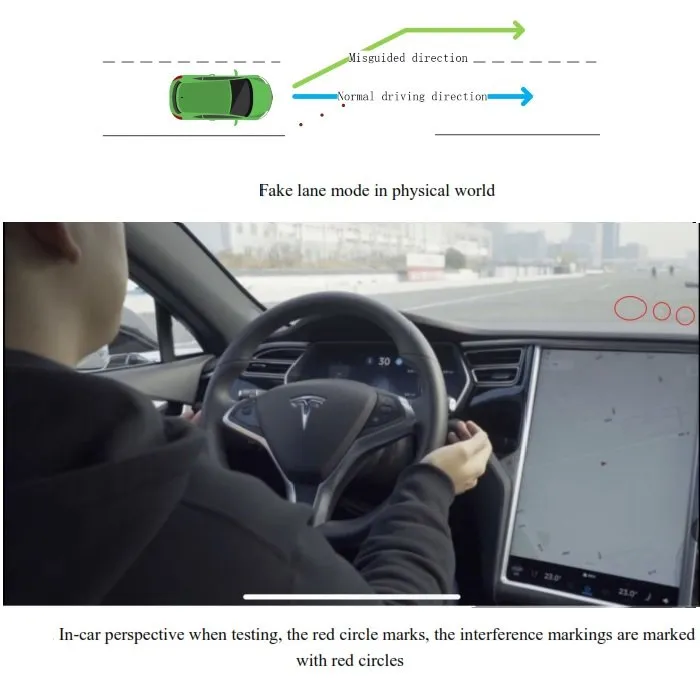

A team from Tencent's Keen Security Lab recently reverse-engineered several of Tesla's automated processes to see how they reacted when environmental variables changed. One of the most striking discoveries was a way to make Autopilot steer into oncoming traffic. The attack worked by carefully placing three stickers on the road surface. The stickers were nearly invisible to drivers. However, the machine-learning algorithms used by Autopilot detected them as a line indicating that the lane was shifting to the left. As a result, Autopilot steered in that direction.

In a detailed, 37-page report, the researchers wrote:

> Tesla autopilot module's lane recognition function has excellent robustness in an ordinary external environment (no intense light, snow, rain, dust and sand interference), but it still doesn't handle the situation correctly in our test scenario. This kind of attack is straightforward to deploy, and the materials are easy to obtain. As we talked in the previous introduction of Tesla's lane recognition function, Tesla uses a pure computer-vision solution for lane recognition, and we found in this attack experiment that the vehicle driving decision is only based on computer-vision lane recognition results. Our tests prove that this architecture has some security risks and reverse lane recognition is one of the necessary functions for autonomous driving in non-closed roads. In the scene we build, if the vehicle knows that the fake lane is pointing to the opposite lane, it should ignore this fake lane and then it could avoid a traffic accident.

Read more from original source: Tesla Autopilot Tricked: Is it a new challenge to Artificial Intelligence?