Hi everyone and happy new year 2022! While many are still enjoying their winter break, school has already restarted in France. Correspondingly, I restarted blogging about particle physics and cosmology on STEMsocial and Hive.

For this first blog of the year (on the day of my birthday by the way), I discuss one of my own research topics. This work gave rise to a first publication in 2020, a second one that is in the middle of the peer-review process, and a contribution to a conference proceedings.

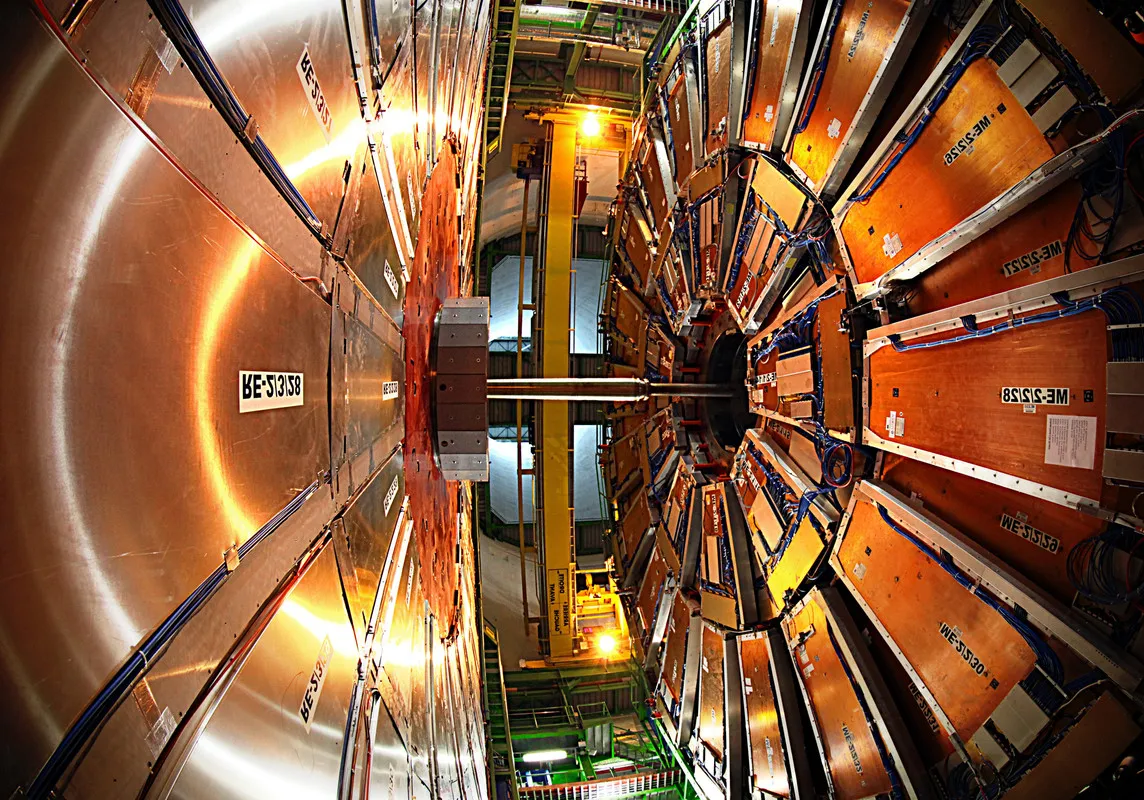

The subject of the day lies in the context of theoretical computations relevant for searches for new phenomena at the Large Hadron Collider at CERN. With my collaborators, we performed new calculations allowing for the best predictions for the production of hypothetical particles called leptoquarks. This last sentence naturally sets the scene for this blog, as I (purposely) managed to introduce several concepts that need to be explained/clarified.

Accordingly, this post will first discuss leptoquarks, explaining what they are, where they come from and how they are searched for. Next, it will focus on how predictions for the Large Hadron Collider work and how precision predictions can be achieved. Finally, I will describe some of the findings of my publications. I will detail in particular why generic predictions cannot be made (in contrast to what was thought up to now), and why large uncertainties can be associated even with the best predictions.

I will try to keep the content of this blog as simple as possible. I hence hope every reader will be able to take something home from it. Please do not hesitate to let me know whether it worked.

[Credits: CERN]

Leptoquarks in a nutshell

A few weeks ago, I shared a description of the microscopic world from the point of view of a particle physicist (see here for details). In this description, I introduced the two classes of elementary particles that form the matter sector of the Standard Model, i.e. the quarks and leptons. More precisely, there are six quarks (up, down, strange, charm, bottom, top), three charged leptons (the electron, muon and tau) and three neutral leptons (the electron neutrino, the muon neutrino and the tau neutrino) in the Standard Model.

The main difference between these two types of particles is that quarks are sensitive to the strong interaction (one of the three fundamental forces included in the Standard Model), whereas leptons are not. Equivalently, we can say that in the Standard Model quarks interact among themselves through electromagnetic, weak and strong interactions. On the other hand, leptons interact among themselves via electromagnetic and weak interactions only. There is however no direct interaction simultaneously involving one quark and one lepton. The two classes of particles are somehow disconnected.

It is now time to move on to the topic of the day: leptoquarks. First of all, it is important to emphasise that leptoquarks are hypothetical particles. This means that they are not part of the Standard Model and that they have not been observed in data (at least up to now). There are however many good motivations behind them, which I will detail below.

Before doing so, let’s have a look at the word leptoquark itself. We can find both lepton and quark inside it. And there is a very good reason for this: a leptoquark is a hypothetical particle that simultaneously interacts with one lepton and one quark. In addition, leptoquarks interact among themselves through electromagnetic, weak and strong interactions (as for quarks).

[Credits: mohamed_hassan (Pixabay)]

But why should we bother about leptoquarks in the first place? The main reasons have been detailed in this blog. The Standard Model of particle physics works very well, but there are good motivations to consider it as the tip of an iceberg that needs to be probed. Among the plethora of possible options for the hidden part of this iceberg, many predict the existence of leptoquarks.

For instance, when we try to unify all fundamental interactions and building blocks of matter (this is called Grand Unification), we automatically get leptoquarks in our way. Similarly, they naturally arise in certain technicolour or composite models. In those models, we add an extra strong force and new building blocks of matter, and the Higgs boson has a composite nature. The list does not end there, and we can cite many other classes of models in which leptoquarks arise. These include specific supersymmetric models or even low-energy realisations of string theory models.

As can be guessed from the previous paragraph, considering leptoquarks as serious candidates for new phenomena is well motivated. Accordingly, they are currently actively searched for at the Large Hadron Collider at CERN (i.e. the LHC).

Leptoquark signals at the Large Hadron Collider

In high-energy proton-proton collisions such as those on-going at the LHC, the most efficient way to produce leptoquarks is to produce them in pairs. It is indeed usually much easier to produce a pair of leptoquarks than a single leptoquark. The reason is that in the former case, the strong force is involved. It hence naturally leads to copious production. In the latter case, on the contrary, we need to rely on the weak force. Single leptoquark production at colliders is thus associated with a lower rate.

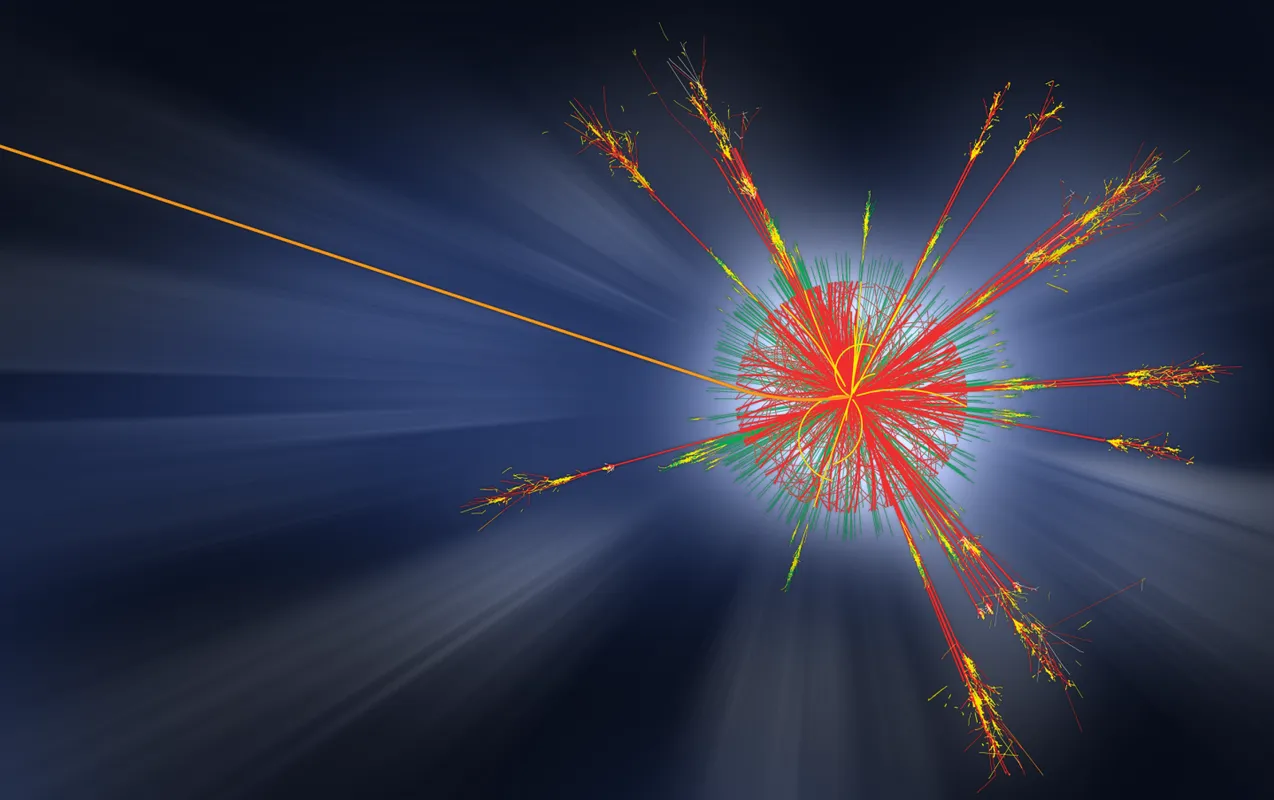

Leptoquarks are unstable and decay almost instantaneously once they are produced. If this were not the case, cosmology would be in big trouble. But into what would a leptoquark decay? The answer was already given earlier in this blog. A leptoquark by definition couples to a quark and a lepton. Consequently, it decays into a pair of particles comprising a lepton and a quark. Two leptoquarks freshly produced at the LHC would then decay into two leptons and two quarks (one lepton and one quark for each leptoquark). This dictates the typical LHC signatures to consider experimentally.

[Credits: ATLAS @ CERN]

As we have six quarks and six leptons in the Standard Model, there is a certain number of possibilities to look for pair-produced leptoquarks. Correspondingly, the ATLAS and CMS experiments look for leptoquark pair-production and decays in a variety of channels. For instance, we have searches for excesses of events (relative to the Standard Model expectation) featuring two top quarks and two tau leptons, two lighter quarks and two electrons, and so on.
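To give a feeling for the number of possibilities, here is a minimal Python sketch that simply lists every quark-lepton combination. It is a naive counting only: the function names are made up for this illustration, and electric-charge conservation as well as the couplings of any specific leptoquark model would remove many of these combinations.

```python
from itertools import product

# Standard Model matter content, as listed earlier in this post.
QUARKS = ["up", "down", "strange", "charm", "bottom", "top"]
LEPTONS = ["electron", "muon", "tau",
           "electron neutrino", "muon neutrino", "tau neutrino"]


def single_decay_channels():
    """All (quark, lepton) pairs a generic leptoquark could decay into.

    Naive counting: charge conservation and model-specific couplings
    are deliberately ignored here.
    """
    return list(product(QUARKS, LEPTONS))


def pair_signature(quark, lepton):
    """Final state when both leptoquarks of a pair decay in the same way."""
    return (quark, quark, lepton, lepton)


channels = single_decay_channels()
print(len(channels))                  # number of naive quark-lepton combinations
print(pair_signature("top", "tau"))   # the 'two tops and two taus' signature
```

The second function reproduces the kind of signature quoted above (two top quarks and two tau leptons); a real search targets only the subset of channels allowed by the model under consideration.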

Unfortunately, there is no sign of a leptoquark in data so far… Constraints are thus put on leptoquark models. One exception to those null search results could (I insist on the conditional tense) be the so-called flavour anomalies, which I already mentioned last month but have not blogged about yet (this will come soon; I promised it to @agmoore).

Those anomalies are connected to long-standing issues in data that are getting more and more solid with time, and that could soon be a proof of physics beyond the Standard Model. We are however not there yet, so we only see these anomalies as a potential hint of physics beyond the Standard Model. Please do not hesitate to have a look at this blog to get more information on what it takes to get a discovery in particle physics.

If we take those anomalies as something solid, we can make use of leptoquarks to explain them. For that reason, leptoquarks have become more and more attractive candidates for physics beyond the Standard Model during the last couple of years.

I mentioned above that the most efficient process to produce a pair of leptoquarks relies on the strong interaction. This naturally leads us to the next part of this blog, in which I will describe how the associated theory calculations are achieved. I will try to leave overly technical details out of the discussion. I however need to be a bit technical to convey somewhat precisely the novelty of my research work. Please do not hesitate to come back to me for clarifications, if needed.

Predictions for the Large Hadron Collider

In order to understand the achievement of my research work, it is important to get information about how we can calculate a production rate in particle physics. Equivalently, I will try to explain how we can estimate the occurrence of collisions at the Large Hadron Collider in which two leptoquarks are produced and further decay.

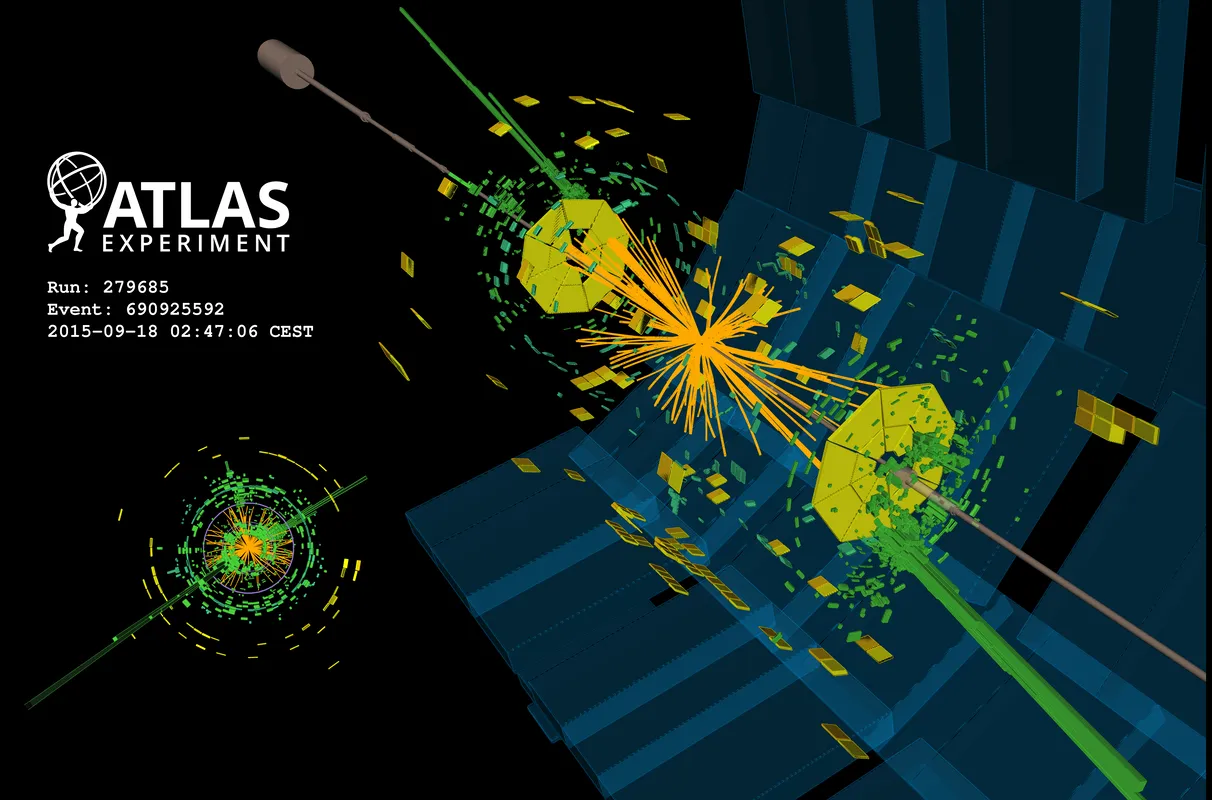

[Credits: CERN]

The first important concept to introduce is that of parton distribution functions. At the Large Hadron Collider, protons are collided. However, in any specific high-energy collision the objects that annihilate and give rise to the final state considered (a pair of leptoquarks here) are not the protons themselves, but their constituents (generically coined partons).

For instance, in order to produce a pair of leptoquarks, we may consider the annihilation of one quark (being one constituent of the first colliding proton) and one antiquark (being one constituent of the second colliding proton). Parton distribution functions then provide the way to connect the colliding protons to their colliding constituents.

The second ingredient in our computation is what we call the hard-scattering rate. This is the rate at which a given partonic initial state (a quark-antiquark pair for instance) will transform into a given final state (a pair of leptoquarks in our example). By ‘partonic’, we mean that we work at the level of the constituents of the colliding protons and not of the protons themselves. As already said, the connection between both is made by the parton distribution functions.

This rate can be computed by making use of the master equation of the theory. In the Standard Model, we thus use the master equation of the Standard Model. In the case of physics beyond the Standard Model, another master equation has to be used (for instance one including leptoquarks and their properties).
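For readers who like formulas, the way the two ingredients described above are combined is usually written as follows. This is the standard textbook form, and all symbols are my own notation for this post: $f_a$ and $f_b$ are the parton distribution functions of the partons $a$ and $b$, $x_a$ and $x_b$ are the fractions of the proton momenta that these partons carry, $s$ is the squared collision energy of the two protons, $\hat\sigma$ is the hard-scattering rate, and $\mu_F$ and $\mu_R$ are two technical (unphysical) scales entering the calculation.

$$
\sigma\big(pp \to \mathrm{LQ}\,\overline{\mathrm{LQ}}\big) \;=\; \sum_{a,b} \int_0^1 \mathrm{d}x_a \int_0^1 \mathrm{d}x_b \; f_a(x_a, \mu_F)\, f_b(x_b, \mu_F)\; \hat\sigma_{ab \to \mathrm{LQ}\,\overline{\mathrm{LQ}}}\big(x_a x_b\, s;\ \mu_F, \mu_R\big)
$$

The sum runs over all possible pairs of constituents (a quark and an antiquark, two gluons, and so on), and the integrals account for the fact that we do not know in advance which share of the proton energy each constituent takes.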

With these two ingredients, we are in principle capable of calculating the desired quantity. However, there is a catch. The microscopic world is quantum: this quantum nature implies that any prediction should include quantum corrections. This was already discussed in this blog in the context of the hierarchy problem of the Standard Model.

[Credits: IRFU]

When dealing with predictions of a production rate at the Large Hadron Collider, we can decide to compute the rate from the above two ingredients without considering quantum corrections at all. We may get some numbers for the predictions, but the missing bits lead to a computation possibly plagued with large uncertainties. In other words, we may get the right order of magnitude for the result, but not necessarily anything more precise than this.

In order to reduce those uncertainties, we need to include quantum corrections in the predictions. The latter can be organised into a next-to-leading-order piece, a next-to-next-to-leading-order piece, and so on (the leading-order contribution being obtained without any quantum correction). Let’s skip any details about how this organisation works (it is driven by the perturbative nature of the strong force). Instead, let’s keep in mind that when we add a next-order contribution to the calculation, the results become more and more precise, and therefore more and more reliable.
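Schematically, and only as a sketch for the contributions driven purely by the strong force, this organisation can be written as an expansion in powers of the strong coupling $\alpha_s$ (the notation is mine and is not taken from the publications):

$$
\hat\sigma \;=\; \underbrace{\alpha_s^2\, \hat\sigma^{(0)}}_{\text{leading order}} \;+\; \underbrace{\alpha_s^3\, \hat\sigma^{(1)}}_{\text{next-to-leading order}} \;+\; \underbrace{\alpha_s^4\, \hat\sigma^{(2)}}_{\text{next-to-next-to-leading order}} \;+\; \dots
$$

As $\alpha_s$ is much smaller than 1 at LHC energies, each additional term is expected to be a smaller correction than the previous one, which is why truncating the series after a few terms makes sense.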

The next-to-leading-order component of the calculation is in principle not too difficult to evaluate. When I was a PhD student, it took me a whole year to compute it for a given process. However, with the advent of more efficient computing techniques, this can now be done in five minutes on any laptop. I may explain how we managed to do this in a future post if there is an interest in it.

For any contribution beyond that, the story is nevertheless very different. We in fact only know them in specific cases and solely for a handful of Standard Model processes. Therefore, as far as leptoquark pair production is concerned, only the next-to-leading-order contributions are known. This has been the case since the end of the 1990s.

My research work: precision predictions for leptoquark pair production

In my recent scientific publications (here and there), we computed the most precise predictions for leptoquark pair-production to date. There were several improvements relative to what was done in the 1990s.

First, we improved the computation of the leading term of the production rate (i.e. predictions without any quantum corrections) by not only including contributions arising from strong interactions, but also from the specific leptoquark-quark-lepton interaction that was mentioned in the beginning of this blog (and that is dictated by neither the strong nor the weak force). The latter was always assumed to be negligible as far as leptoquark pair production was concerned. However, in the light of the recent flavour anomalies, this is not necessarily the case anymore.

Second, we computed the next-to-leading-order corrections to the full leading-order production rate. In this way, we did not only reproduce the computations from the 1990s, but we additionally included corrections to contributions involving a leptoquark-quark-lepton interaction.

Our achievement does not however end there. Third, we managed to consistently include bits of every single higher-order component of the rate, making the calculation as precise as could be with the knowledge of today.

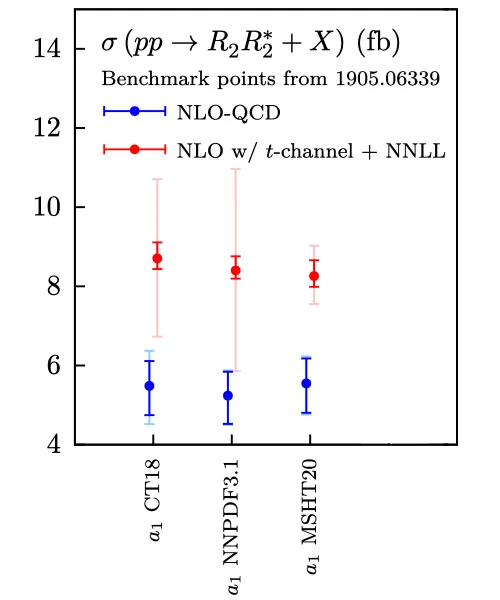

An example of our results is shown in the figure below, for a given leptoquark scenario that can explain the flavour anomalies (that are still undiscussed; I know).

[Credits: arXiv]

In blue, we have predictions as taken from the calculations of the 1990s. They consistently include leading-order and next-to-leading-order contributions, but after ignoring any effect associated with the leptoquark-quark-lepton coupling. The figure exhibits three sets of predictions, the quantity shown on the y-axis being the leptoquark production rate at the LHC in appropriate units.

For each of the three ‘blue predictions’, different parton distribution functions are employed. All of these three are very modern and widely used, and none is better than another. The differences in the predictions can then be taken as extra uncertainties on the results.
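One simple way to turn such differences into an extra uncertainty is to take the envelope of the individual predictions. The Python sketch below illustrates this idea under stated assumptions: the envelope prescription is one common convention and not necessarily the one used in the publications, the function name is made up, and the numbers are placeholders rather than actual results.

```python
def envelope(predictions):
    """Combine predictions obtained with several parton-distribution sets.

    `predictions` maps a set name to (central, err_down, err_up), all in
    the same units. Returns (midpoint, half_width) of the band covering
    every individual error bar.
    """
    lows = [central - down for central, down, _ in predictions.values()]
    highs = [central + up for central, _, up in predictions.values()]
    low, high = min(lows), max(highs)
    return (low + high) / 2, (high - low) / 2


# Placeholder numbers in arbitrary units, NOT values from the publications.
toy_predictions = {
    "set A": (10.0, 1.0, 1.0),
    "set B": (11.0, 2.0, 2.0),
    "set C": (9.5, 0.5, 0.5),
}

midpoint, half_width = envelope(toy_predictions)
print(f"{midpoint:.1f} +/- {half_width:.1f}")
```

With this choice, a single set with large error bars (or one that disagrees with the others) directly inflates the final uncertainty, which is the kind of effect discussed in the rest of this section.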

In red we have our new results. We can see that the predicted rates are almost 50% higher than what could be expected from the older calculations. This shows that previously neglected pieces of the calculations were not so negligible after all.

Moreover, the level of uncertainties (i.e. the size of the error bars) is strongly affected by the employed parton distribution functions. The gain in precision due to the newly added quantum corrections (comparing the solid blue and solid red error bars) is here tempered by the loss in precision due to the parton distribution functions (comparing the pale blue and pale red error bars). This was unexpected.

For other leptoquark scenarios, we have even found that predictions relying on different parton distribution functions sometimes did not agree with each other. The good news is that more LHC data is expected to cure this issue, as already visible in the rightmost prediction. This set of parton distribution functions indeed uses a larger amount of available LHC data, relative to the two others that still need to be updated. In that case, the improvement cannot be missed.

Summary, take-home message and TLDR version of this blog

It is now time to finalise this blog. The topic of today concerned one of my current research works, and was dedicated to the computation of the best possible predictions for leptoquark pair production at the Large Hadron Collider.

Leptoquarks are hypothetical particles that are sensitive to all fundamental forces. Moreover, they feature a special interaction involving simultaneously one quark and one lepton of the Standard Model. Leptoquarks have received increasing attention in recent years as they could provide an explanation for several anomalies seen in data.

In my work, we improved predictions relative to older calculations from the 1990s, and showed that the older results could be misleading in scenarios relevant in light of current data. We have shown that the production rates could differ substantially (by several tens of percent in relative size) from what was expected, and that the associated uncertainties could additionally be larger.

Care must thus be taken when drawing conclusions from data. Today, both the ATLAS and CMS experiments already use our results in the context of their searches for leptoquarks. Precision is always welcome for a search, as it affects the associated limits (when no signal is found) or a discovery (when a signal is found). It hence offers in all cases more robust conclusions.

I hope you all enjoyed this post, in which I have tried to use a vocabulary that is as simple as possible. I hope the job has been well done. Please let me know (or ask for clarifications if needed).

See you next Monday!