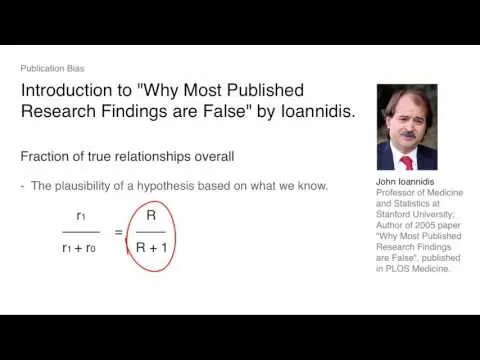

This is a very interesting 2005 paper by Stanford University professor John Ioannidis that builds a statistical model to estimate the probability that scientific findings are actually true. Although many papers look for statistical correlations between a hypothesis and the experimental outcomes, many do not first estimate the possibility that there is no causal relationship at all. In other words, if there is no actual causal relationship between the input and the results, then every measured positive finding is merely an "accurate measure of the prevailing bias". For example, if there is no correlation between a specific gene and cancer, then any number of papers claiming that there is are simply false positives. By incorporating these probabilities, along with a model of the general bias within a field, Ioannidis concluded that most published findings are more likely false than true. The problem is even more pressing in fields with many variables in both the inputs and the results, such as genetic microbiology and medicine. The paper offers a great thought experiment for making sense of such a large body of scientific papers and claimed findings, especially the many findings that contradict one another.
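To make the reasoning concrete, here is a minimal sketch of the positive predictive value (PPV) calculation, i.e. the probability that a claimed finding is true. It assumes the notation used in the paper: `R` is the pre-study odds (ratio of true to null relationships being tested), `alpha` is the type I error rate, `beta` is the type II error rate (so power is `1 - beta`), and `u` is the bias, the fraction of analyses that would otherwise come out negative but get reported as positive. The function name and the example values are illustrative choices of mine, not taken from the paper.

```python
def ppv(R: float, alpha: float = 0.05, beta: float = 0.2, u: float = 0.0) -> float:
    """Probability that a claimed positive finding is true.

    Sketch of the pre-study-odds model as described in Ioannidis (2005);
    parameter defaults are illustrative assumptions, not values from the paper.
    R     -- pre-study odds: true relationships per null relationship tested
    alpha -- type I error rate
    beta  -- type II error rate (power = 1 - beta)
    u     -- bias: share of would-be negative results reported as positive
    """
    # Positives among true relationships: detected by power,
    # plus bias turning missed true effects into reported findings.
    true_pos = (1 - beta) * R + u * beta * R
    # Positives among null relationships: type I errors,
    # plus bias turning correct negatives into reported findings.
    false_pos = alpha + u * (1 - alpha)
    return true_pos / (true_pos + false_pos)


if __name__ == "__main__":
    # Plausible hypothesis, 1:1 pre-study odds, 80% power: most claims hold up.
    print(round(ppv(R=1.0), 3))          # 0.941
    # Exploratory screen, roughly 1 real relationship per 100 null ones.
    print(round(ppv(R=0.01), 3))         # 0.138
    # Same screen with modest bias (u = 0.3).
    print(round(ppv(R=0.01, u=0.3), 3))  # 0.025
```

With no bias (`u = 0`), the PPV exceeds 0.5 only when `(1 - beta) * R > alpha`, which is why fields that test many unlikely relationships, and that carry even a little bias, end up with mostly false claimed findings.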

https://journals.plos.org/plosmedicine/article?id=10.1371/journal.pmed.0020124

Digital object identifier (DOI): https://doi.org/10.1371/journal.pmed.0020124

PDF: PDF Link

Archive: Archive Link

MES local PDF: https://1drv.ms/b/s!As32ynv0LoaIiN171_oyGrcXWBgrwQ?e=bMNry1

3-Part Complementary Video Series

For a further breakdown, here is a good video series from UC Berkeley that walks through Ioannidis' paper and methodology.